Installing HP Cloudsystem 9.0 - Part 7

This post deals with registering vCenter and activating a compute cluster so you can deploy instances and play with OpenStack.

So, browse to the Operations Console Integrated Tools section

http://192.168.10.80/#/system/integrated_tools

Enter your vCenter details and Click Register

You will see this when completed

Clicking on the entry gives the following information and also allows you to update settings later, for example after a password change.

Now click on the Compute menu section and then Compute Nodes. You will see your clusters there. I'm ready to activate my compute cluster, which has an Intel NUC in it built for this purpose. I did reset the networking first: the host was previously attached to my main Distributed vSwitch, so I created a second DVS and joined the host to that instead. See the Admin Guide from page 123 onwards for guidance.

Go to vCenter and Manage each Compute host, go to the Configuration and edit the Security Profile / Firewall / inbound configuration to enable "VM serial port connected over network".

There is also an option of enabling console access from the OpenStack console via VNC on ports 5900-6105, but it's a wide range and you probably won't give users access to the OpenStack console anyway.
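To get a feel for how wide that range is, and to see from a client which of those ports actually answer on a host, here's a minimal Python sketch. It assumes a plain TCP connect is a good enough test; the names `VNC_PORTS` and `open_ports` are mine, purely for illustration.

```python
import socket

# The VNC console range mentioned above: 5900-6105 inclusive (206 ports).
VNC_PORTS = range(5900, 6106)


def open_ports(host, ports, timeout=0.5):
    """Return the ports from `ports` that accept a TCP connection on `host`."""
    found = []
    for port in ports:
        try:
            # create_connection raises OSError if the port is closed,
            # filtered by a firewall, or the attempt times out.
            with socket.create_connection((host, port), timeout=timeout):
                found.append(port)
        except OSError:
            pass
    return found
```

Something like `open_ports("compute.lab.local", VNC_PORTS)` would then list whatever is reachable. Expect it to be slow if the firewall silently drops packets, since each closed port waits out the timeout.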

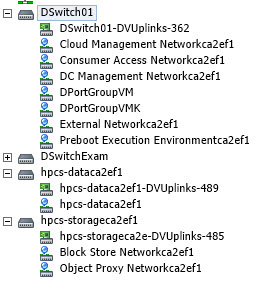

The screenshot above shows how my DVS configuration is looking currently. The Management Host uses DSwitch01 and the Compute Host DSwitch02. Now, I'm really excited about getting VM segmentation without having to use NSX so here is where we exploit the Open vSwitch vApp!

Go to the folder you extracted the CloudSystem 9.0 Tools to and you will see an OVA called "cs-ovsvapp.ova". Upload this to vCenter as follows:

So, when we activate the compute cluster this OVSvApp is automatically installed on each ESXi Host.

Note: I ran into a problem locating the OVSvApp on my Management cluster. It's just a cloning problem, but to resolve it I rebooted my vCenter to clear the clone, deleted the template, and reimported the OVA directly to my Compute Cluster.

Let's see if this is true.

I've selected the Cloud Cluster where my Intel NUC Compute Node is and you get an option to Activate. Double check your networking and the relevant section in the Admin Guide and off we go!

You can specify vmnic interfaces here and have CS9 create the DVS or, as I have, do it yourself and tell CS9 where to go. Click Queue for Activation and then click Complete Activations, which I guess is there for when you're doing many at a time.

Boy, they aren't taking any chances! Click Confirm Activations when ready. You will see the Cluster Activating

And you will also see it cloning your OVSvApp!

After the Clone / relocate issue I encountered I was unable to successfully re-activate the Compute Cluster. I got errors as follows:

Beginning activation checks (Cloud(labvc.lab.local)),

Verifying that no whitespace or special characters exists in the cluster name or its datacenter name while activating cluster Cloud,

Checking if controller(s) are reachable while activating the cluster Cloud,

Verifying cluster has at least one host,

Verifying cluster has at least one shared datastore,

Verifying that the cluster is enabled with DRS,

Checking for instances on the compute Cloud(labvc.lab.local),

Error: OVSvApp installation failed: (Exception),

Initializing OVSvApp installer configuration,

Running OVSvApp installation scripts

I also got:

Error: OVSvApp installation failed: Couldn't find the OVSvApp Template/Appliance (Exception),

I found one problem: when I dropped my compute host back to a standard vSwitch and created a new DVS, I forgot to check that jumbo frames were enabled, so my clone of the OVSvApp (or anything else) was getting stuck at 29%. Once that was fixed I rebooted vCenter and the Compute Host and tried again with the template attached and stored on the compute host:
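A quick way to confirm jumbo frames work end to end is a don't-fragment ping sized to fill the MTU. Here is a minimal Python sketch that works out the payload size and builds the `vmkping` command to run on the ESXi host. It assumes a 9000-byte MTU and plain IPv4 headers with no options; the helper names are made up for illustration and the target address is whatever VMkernel IP sits at the other end.

```python
IP_HEADER_BYTES = 20    # IPv4 header without options (assumption)
ICMP_HEADER_BYTES = 8   # ICMP echo header


def ping_payload(mtu):
    """Largest ICMP payload that fits in one unfragmented packet of size `mtu`."""
    return mtu - IP_HEADER_BYTES - ICMP_HEADER_BYTES


def vmkping_command(target, mtu=9000):
    """Build a vmkping command: -d sets don't-fragment, -s sets the payload size."""
    return f"vmkping -d -s {ping_payload(mtu)} {target}"
```

For a 9000-byte MTU the payload comes out at 8972 bytes. If that ping fails while a default-sized one succeeds, something along the path (vSwitch, DVS, or physical switch) is still at a smaller MTU.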

Beginning activation checks (Cloud(labvc.lab.local)),

Verifying that no whitespace or special characters exists in the cluster name or its datacenter name while activating cluster Cloud,

Checking if controller(s) are reachable while activating the cluster Cloud,

Verifying cluster has at least one host,

Verifying cluster has at least one shared datastore,

Verifying that the cluster is enabled with DRS,

Checking for instances on the compute Cloud(labvc.lab.local),

Initializing OVSvApp installer configuration,

Running OVSvApp installation scripts,

Found an existing management DVS & DCM portgroup,OVSvApp has been created and configured successfully,Successfully created DVS,

Checking for VCN L2 agent status,

Deployed OVSvApp virtual machines. Status: [u' ovsvapp-compute.lab.local on the host compute.lab.local is up and running '],

Updating Host details for cluster Cloud,

Updating OVSvApp details in vCenter: labvc.lab.local,

Updating cluster details for nova.conf,

Waiting for the service to be detected by the Cloud controller,

Compute node: domain-c261(Cloud) successfully added in the list of hypervisors,

Ending Activation (Cloud(labvc.lab.local))
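The checks at the start of that log are simple enough to run yourself before clicking Activate. This is a rough sketch of my reading of them, not CloudSystem's actual code: the `Cluster` class, the `preflight` function, and the rule for what counts as a "special character" (anything other than letters, digits, hyphens and underscores) are all assumptions.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Hypothetical summary of a vCenter cluster, filled in by hand or by script."""
    name: str
    datacenter: str
    hosts: list = field(default_factory=list)
    shared_datastores: list = field(default_factory=list)
    drs_enabled: bool = False


# Assumed definition of a "safe" name: letters, digits, hyphen, underscore.
_SAFE_NAME = re.compile(r"[A-Za-z0-9_-]+")


def preflight(cluster):
    """Return a list of problems that would likely fail the activation checks."""
    problems = []
    for label, value in (("cluster", cluster.name),
                         ("datacenter", cluster.datacenter)):
        if not _SAFE_NAME.fullmatch(value):
            problems.append(
                f"{label} name {value!r} contains whitespace or special characters")
    if not cluster.hosts:
        problems.append("cluster has no hosts")
    if not cluster.shared_datastores:
        problems.append("cluster has no shared datastore")
    if not cluster.drs_enabled:
        problems.append("DRS is not enabled")
    return problems
```

An empty list means the basics look fine. It won't catch the template or jumbo frame problems I hit, since those only show up once the OVSvApp installer runs.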

Phew!! I now have a slightly different network configuration. The OVSvApp has 4 NICs, 3 new ones on DSwitch02, but it also creates a new DVS with no physical uplinks?!

Now the NUC only has 2 x pCPU and the OVSvApp requires the following hardware:

I might try to edit the vCPU count and reduce it to 2... Done, and it appears to be OK.

The Activated Compute Nodes look like this now in the Operations Console:

Tenant Networks are created in the Trunk DVS, which then allows the OVSvApp to control who they can talk to and how. The 4 OVSvApp NICs use the VMXNET3 driver, which means no 1Gb E1000 bottlenecks! Lovely! Just check the Load Balancing Policy on these new Port Groups to ensure you're getting the best configuration in your environment.

I also just noticed that on pages 16-17 of the Troubleshooting guide they recommend removing two distributed switches when this happens (failed first attempt at activation):

Manually remove the switches before retrying the compute activation action.

1. Using administrator credentials, log in to vCenter.

2. Select Inventory→Networking.

3. Right-click the Cloud-Data-Trunk-<cluster_name> distributed switch and select Remove.

4. Right-click the CS-OVS-Trunk-<cluster_name> distributed switch and select Remove.

5. Retry the activation action on the ESXi compute node.
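If you want to check whether those leftovers exist after a failed activation, the two names from the guide can be built from the cluster name. A small sketch: the function names are hypothetical, and the list of switch names would come from wherever you export your vCenter networking inventory.

```python
def leftover_switch_names(cluster_name):
    """The two distributed switch names the Troubleshooting guide says to remove."""
    return [
        f"Cloud-Data-Trunk-{cluster_name}",
        f"CS-OVS-Trunk-{cluster_name}",
    ]


def switches_to_remove(cluster_name, inventory_names):
    """Return the switches in `inventory_names` left behind by a failed activation."""
    wanted = set(leftover_switch_names(cluster_name))
    return [name for name in inventory_names if name in wanted]
```

For my cluster, called Cloud, that means looking for Cloud-Data-Trunk-Cloud and CS-OVS-Trunk-Cloud alongside DSwitch01 and DSwitch02.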

That's all for now. I'll cover the command line tools in the next post, but after that I'm stuck. I've been having difficulty deploying Windows images from Glance to ESXi. I had similar experiences using OpenStack over a year ago but found ways around it. Back to the drawing board, but work is busy so it may be a while: booting the lab up and shutting it down is labour intensive, so it's not a 5 minute quick check. If you find a solution, post a comment!
